Cyber attackers are leveraging the power of artificial intelligence (AI) to boost their chances of success in email-based attacks. AI tools can help them to develop and launch more attacks, more frequently, and to make these attacks more evasive, convincing, and targeted. But to what extent are they doing these things? Learn this and more in this Threat Spotlight.

Determining whether or how AI has been used in an email attack is not always straightforward, and this makes it harder to see what is really going on under the hood. We believe that to build effective defenses against AI-based email attacks, we need to have a better understanding of how attackers are using these tools today, what they are used for, and how that is evolving.

There are numerous reports about how cybercriminals are using generative AI to fool their victims, but there is limited hard data on how attackers are using such tools to increase the efficiency of their attacks.

To find some answers, a group of researchers from Columbia University and the University of Chicago collaborated with Barracuda to analyze a large dataset of unsolicited and malicious emails spanning from February 2022 to April 2025.

Detecting the use of AI

Our research team trained detectors to automatically identify whether a malicious/unsolicited email was generated using AI.

We achieved this by assuming that emails sent before the public release of ChatGPT in November 2022 were likely to have been written by humans. This gave us a reliable baseline for the detector’s false positive rate: any email from that period that the detector flags as AI-generated is very likely a false alarm.
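The baseline idea can be illustrated with a minimal sketch. It is not the research team's detector: the score scale, the `threshold` value, and the sample data are all hypothetical, and the only assumption taken from the text is that emails sent before ChatGPT's public release (30 November 2022) are treated as human-written.

```python
from datetime import date

# Public release of ChatGPT; emails sent before this are assumed human-written.
CHATGPT_RELEASE = date(2022, 11, 30)


def false_positive_rate(emails, threshold):
    """Share of pre-ChatGPT emails that the detector flags as AI-generated.

    `emails` is a list of (sent_date, detector_score) pairs. The score scale
    and the threshold are hypothetical and only serve the illustration.
    """
    baseline = [score for sent, score in emails if sent < CHATGPT_RELEASE]
    if not baseline:
        raise ValueError("no pre-ChatGPT emails to calibrate on")
    flagged = sum(1 for score in baseline if score >= threshold)
    return flagged / len(baseline)


# Made-up scores, not data from the study.
sample = [
    (date(2022, 3, 1), 0.10),
    (date(2022, 6, 15), 0.35),
    (date(2022, 9, 9), 0.92),   # a human-era email that scores high
    (date(2022, 10, 20), 0.20),
    (date(2023, 5, 2), 0.97),   # sent after the release, so excluded from the baseline
]

print(false_positive_rate(sample, threshold=0.9))  # 0.25
```

In this toy sample, one of the four pre-ChatGPT emails crosses the threshold, so a detector configured this way would wrongly flag about a quarter of human-written mail. Raising the threshold lowers that rate at the cost of missing more genuinely AI-generated emails.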

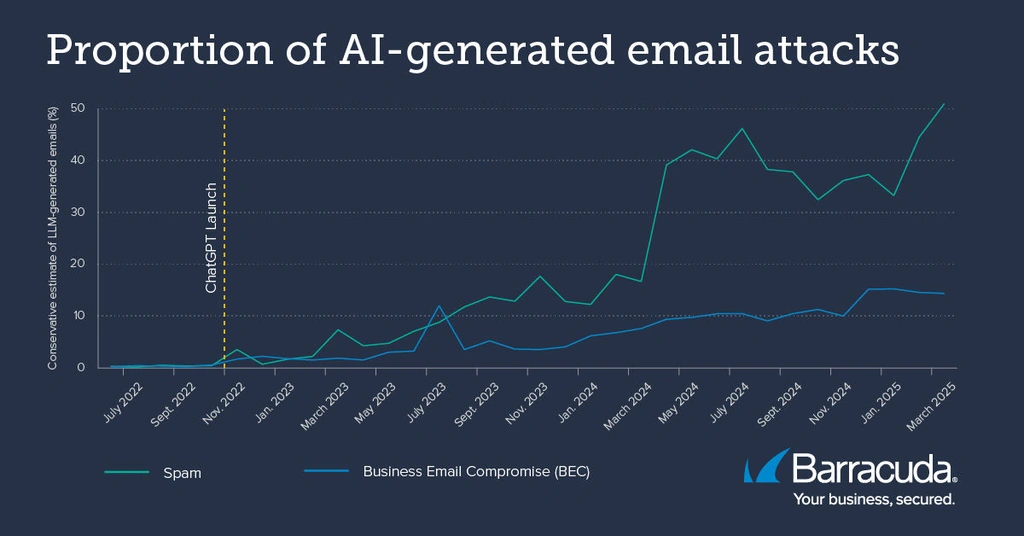

Running the full 2022 to 2025 Barracuda dataset through the detector reveals a steady increase in AI-generated content in both spam and business email compromise (BEC) attacks after the release of ChatGPT, although the rate of increase differs sharply between the two.

AI helps to swamp inboxes with spam

Spam showed the most frequent use of AI-generated content, significantly outpacing other attack types over the past year. By April 2025, a majority of spam emails (51%) were generated by AI rather than written by humans. In other words, most of the emails currently sitting in the average junk or spam folder are likely to have been written by a large language model (LLM).

In comparison, the use of AI-generated content in BEC attacks is increasing much more slowly. BEC attacks involve precision: they typically target a senior person in the organization (e.g., the CFO) with a request for a wire transfer or another financial transaction. The analysis showed that by April 2025, 14% of BEC attacks were generated by AI.
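Percentages like these come from counting, for each attack type and month, how many emails the detector flagged. The sketch below shows one way such a tally could be computed. The record layout (`attack_type`, `month`, `is_ai`) is an assumption made for illustration, and the toy records are invented, so the resulting shares are not the study's figures.

```python
from collections import defaultdict


def ai_share_by_month(records):
    """Return {(attack_type, month): fraction of emails flagged as AI-generated}.

    `records` is an iterable of (attack_type, month, is_ai) tuples. This
    layout is hypothetical, not the format of the Barracuda dataset.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for attack_type, month, is_ai in records:
        key = (attack_type, month)
        totals[key] += 1
        flagged[key] += int(is_ai)
    return {key: flagged[key] / totals[key] for key in totals}


# Invented records for illustration only.
records = [
    ("spam", "2025-04", True), ("spam", "2025-04", True),
    ("spam", "2025-04", False), ("spam", "2025-04", False),
    ("bec", "2025-04", True), ("bec", "2025-04", False),
    ("bec", "2025-04", False), ("bec", "2025-04", False),
]

shares = ai_share_by_month(records)
print(shares[("spam", "2025-04")])  # 0.5
print(shares[("bec", "2025-04")])   # 0.25
```

Tracking the two attack types separately is what makes the contrast visible: a single combined figure would be dominated by spam volume and would hide the slower uptake in BEC.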

Attackers’ motives for using AI

We also explored attackers’ motivation for using AI to generate attack emails by analyzing the content of AI-generated emails.

AI-generated emails typically showed higher levels of formality, fewer grammatical errors, and greater linguistic sophistication than human-written emails. These features likely help malicious emails evade detection systems and appear more credible and professional to recipients. They are especially useful where the attackers’ native language differs from that of their targets. In the Barracuda dataset, most recipients were in countries where English is widely spoken.

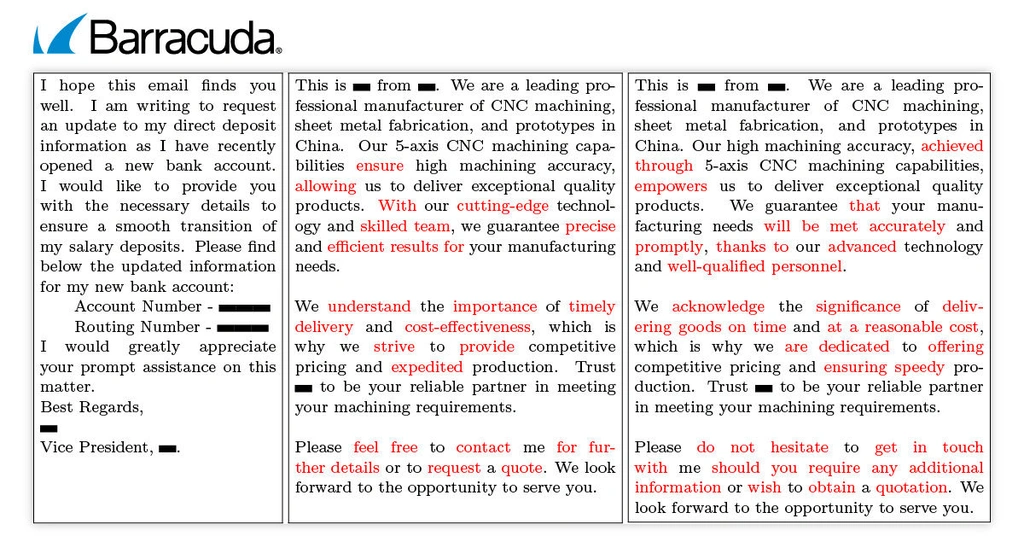

Attackers also appear to be using AI to test wording variations to see which are more effective in bypassing defenses and encouraging more targets to click links. This process is similar to A/B testing done in traditional marketing.

Examples of emails detected as LLM-generated. The first one is a BEC email. The second and third are spam emails. The spam emails seem to be reworded variants, with differences shown in red.
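Reworded variants of this kind can be compared programmatically. The following sketch uses Python's standard `difflib` module to list the word-level differences between two messages, roughly what the red highlighting conveys. The two sample sentences are invented for illustration and are not taken from the dataset.

```python
import difflib


def changed_words(a, b):
    """Return (old, new) word spans that differ between two email texts."""
    a_words, b_words = a.split(), b.split()
    matcher = difflib.SequenceMatcher(None, a_words, b_words, autojunk=False)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            # Inserted or deleted spans show up with an empty string on one side.
            changes.append((" ".join(a_words[i1:i2]), " ".join(b_words[j1:j2])))
    return changes


# Hypothetical variants, written for this example.
variant_1 = "Claim your exclusive reward today by visiting the link"
variant_2 = "Claim your special reward now by visiting the link"

print(changed_words(variant_1, variant_2))
# [('exclusive', 'special'), ('today', 'now')]
```

A small number of swapped words in an otherwise identical message is the pattern that suggests systematic rewording rather than independently written emails.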

Our team’s analysis shows that LLM-generated emails did not significantly differ from human-generated ones in terms of the sense of urgency communicated. Urgency is a deliberate tactic commonly used to exert pressure and elicit an instinctive response from the recipient (e.g., “click this button now!” or “urgent wire transfer”).

This suggests that attackers are primarily using AI to refine their emails and possibly their English rather than to change the tactics of their attacks.

How to protect against email attacks created with AI

The research is ongoing as the use of generative AI in email attacks continues to evolve, helping attackers to refine their approach and make attacks more effective and evasive.

At the same time, AI and machine learning are helping to improve detection methods. That’s why an advanced email security solution equipped with multilayered, AI/ML-enabled detection is crucial.

Education also remains a powerful and effective protection against these types of attack. Invest in security awareness training for employees to help them to understand the latest threats and how to spot them, and encourage employees to report suspicious emails.

This Threat Spotlight was authored by Wei Heo with research support from Van Tran, Vincent Rideout, Zixi Wang, Anmei Dasbach-Prisk, and M. H. Afifi, and professors Ethan Katz-Bassett, Grant Ho, Asaf Cidon, and Junfeng Yang.

This article was originally published at Barracuda Blog. Learn more about current threat trends by reviewing past Threat Spotlight articles.

Photo: fizkes / Shutterstock

This post originally appeared on Smarter MSP.